The objective of this research is to explore state-of-the-art learning-based control techniques that increase the intelligence and autonomy of UAVs.

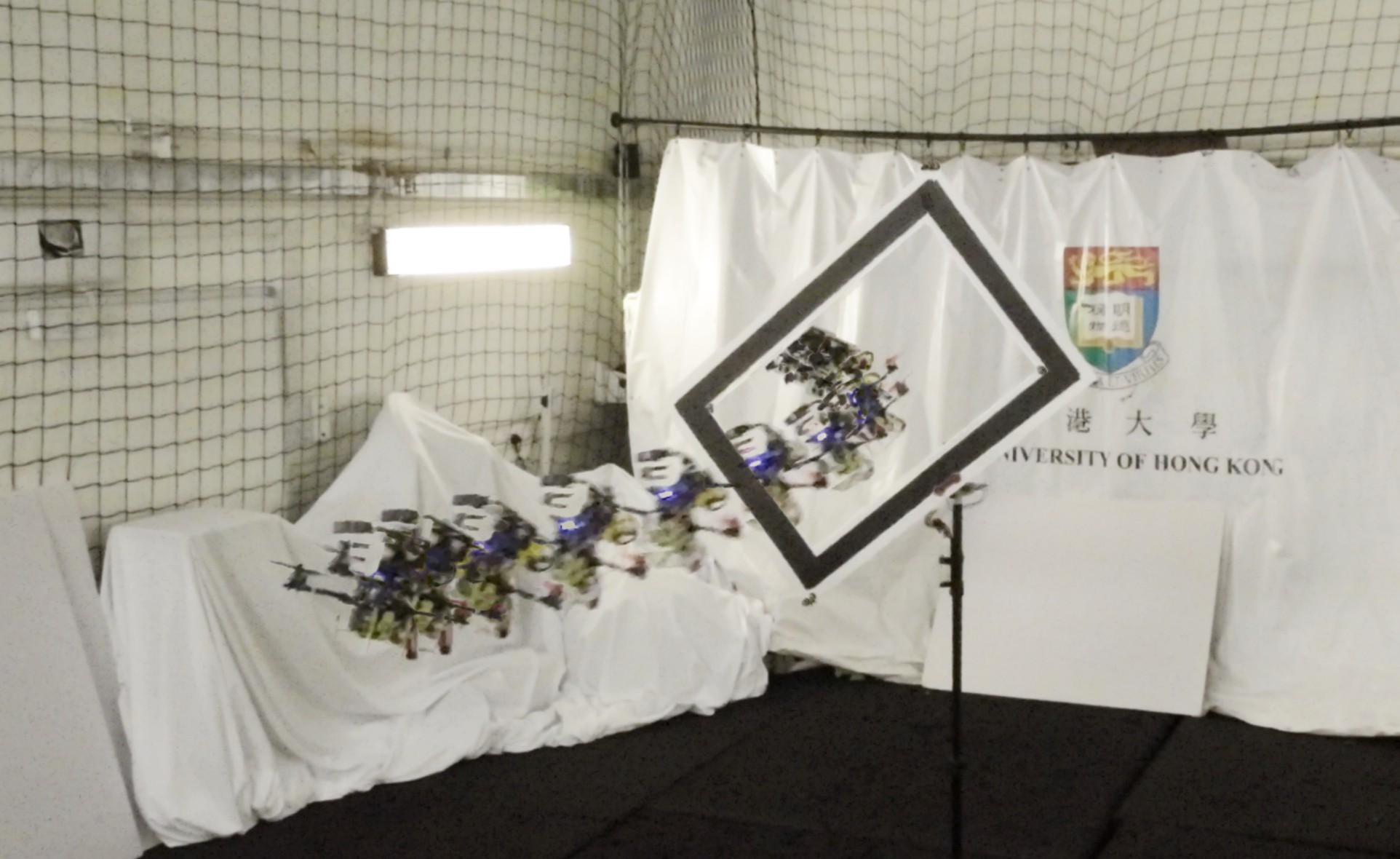

Aggressive flight maneuvers, such as passing through a narrow gap, can be difficult to achieve with conventional control techniques. Using reinforcement learning (RL), we demonstrate that the UAV can plan its motion optimally to pass through the gap.

Example research

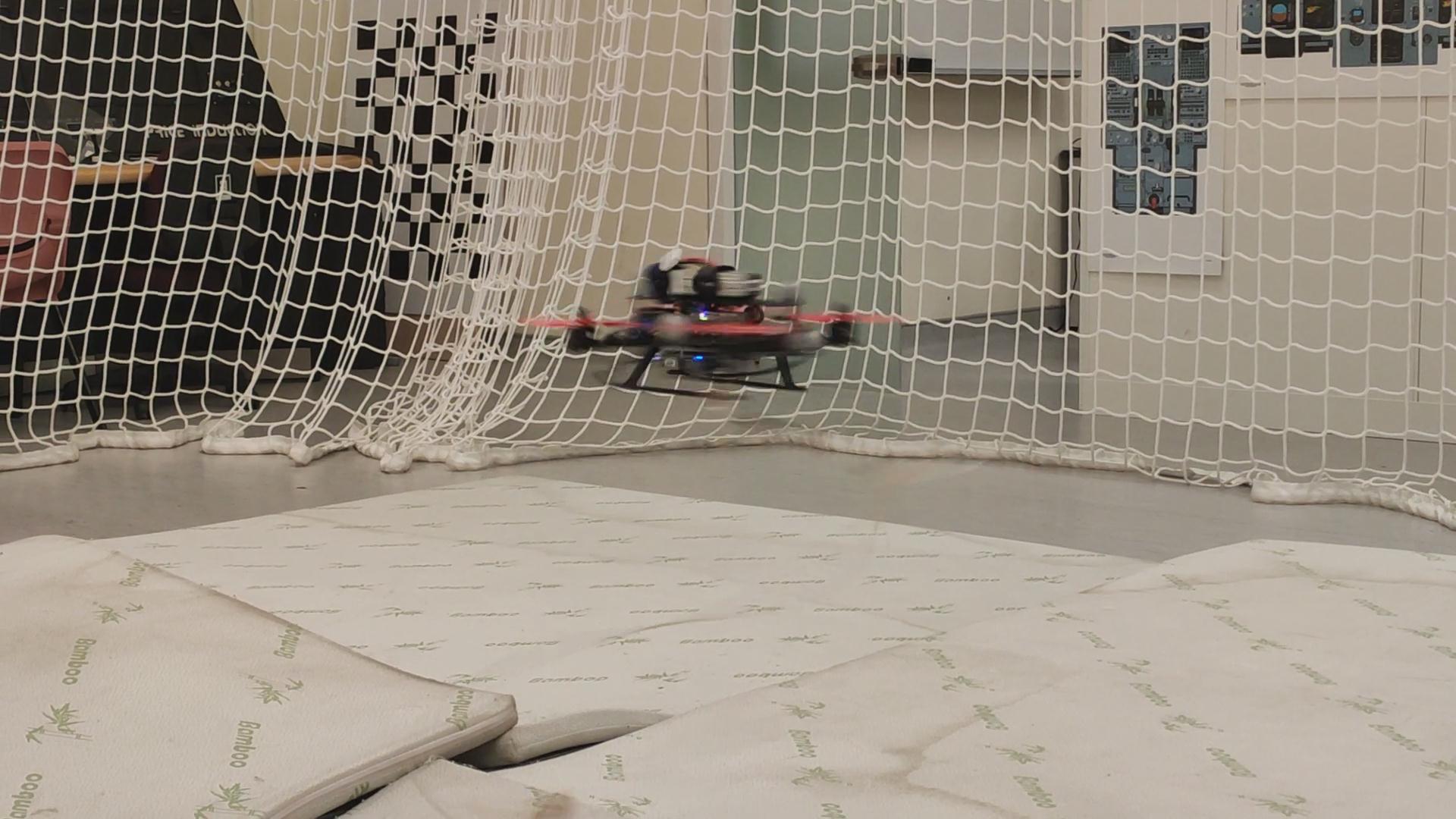

Motion planning and obstacle avoidance for UAVs

This research mainly focuses on developing real-time motion planning and control techniques that enable UAVs to accomplish specific tasks. Research topics include, but are not limited to:

Motion generation and planning

Robust and adaptive control

Obstacle avoidance

Path planning

Example research

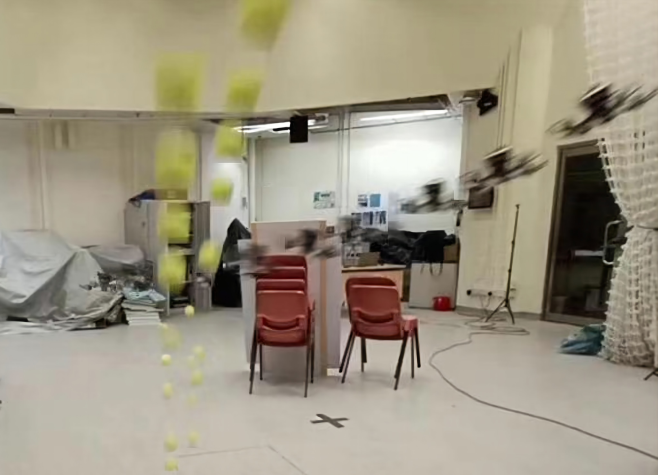

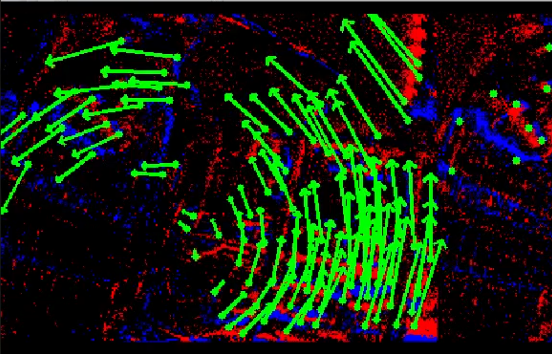

Visual Odometry and SLAM for UAVs

The main goal is to increase the autonomy of UAVs by exploring state-of-the-art vision and control techniques. The vision module detects and tracks objects, while the control module executes the commands needed to accomplish specific tasks. Topics include navigation in complex environments, object detection and tracking, and obstacle avoidance.
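To make the split between the vision and control modules concrete, here is a minimal sketch under our own assumptions: a hypothetical detector reports the target's horizontal pixel coordinate (or `None` on a missed frame), an alpha-beta filter stands in for the tracker, and a saturated proportional law turns the tracked offset into a yaw-rate command. The names `AlphaBetaTracker` and `yaw_rate_command`, the gains, the image width and the sign convention are all illustrative and are not taken from the research described here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlphaBetaTracker:
    """Vision side (hypothetical): tracks a target's horizontal image coordinate in pixels."""
    alpha: float = 0.5  # position correction gain (illustrative)
    beta: float = 0.1   # velocity correction gain (illustrative)
    position: Optional[float] = None
    velocity: float = 0.0  # pixels per second

    def update(self, detection: Optional[float], dt: float) -> Optional[float]:
        if self.position is None:
            # Initialise on the first detection; nothing to track before that.
            self.position = detection
            return self.position
        predicted = self.position + self.velocity * dt
        if detection is None:
            # Missed detection: coast on the constant-velocity prediction.
            self.position = predicted
        else:
            innovation = detection - predicted
            self.position = predicted + self.alpha * innovation
            self.velocity += self.beta * innovation / dt
        return self.position


def yaw_rate_command(target_u: Optional[float], image_width: int = 640,
                     gain: float = 0.005, max_rate: float = 1.0) -> float:
    """Control side (hypothetical): saturated proportional yaw-rate command in rad/s.

    Assumed convention: a target right of the image centre gives a positive rate.
    """
    if target_u is None:
        return 0.0  # no target yet: hold heading
    error = target_u - image_width / 2
    return max(-max_rate, min(max_rate, gain * error))


# Usage with made-up detector output at 30 frames per second (None = missed frame).
tracker = AlphaBetaTracker()
for z in [400.0, 398.0, None, 390.0, 385.0]:
    u_est = tracker.update(z, dt=1 / 30)
    print(f"estimate {u_est:.1f} px -> yaw rate {yaw_rate_command(u_est):+.3f} rad/s")
```

Keeping the tracker and the controller as separate pieces mirrors the module split in the text: either one can be replaced (for example, a learned detector or a different control law) without touching the other.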

Example research

Fault-Tolerant Control techniques for UAVs

The objective of this research is to improve the safety of UAVs, especially when they are used for outdoor tasks.

UAV failures must be detected in real time using fault detection techniques. The failure information is then used to reconfigure the controller so that the UAV keeps flying safely even in the presence of failures.
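The detect-then-reconfigure loop described above can be illustrated on a toy first-order plant. This is a minimal sketch under stated assumptions, not the method used in this research: the plant `x' = b * u`, the injected 50% loss of actuator effectiveness, the residual threshold, the persistence counter and the names `FaultMonitor` and `simulate` are all hypothetical. The detector compares the measured response with a model prediction and declares a fault after several consecutive large residuals; the controller is then reconfigured by re-estimating the effectiveness `b` and rescaling its command.

```python
from dataclasses import dataclass


@dataclass
class FaultMonitor:
    """Residual-based fault detector (illustrative; names are hypothetical)."""
    threshold: float      # residual magnitude treated as abnormal
    persistence: int      # consecutive violations needed to declare a fault
    count: int = 0
    faulty: bool = False  # latched once a fault is declared

    def update(self, residual: float) -> bool:
        # Count consecutive threshold violations to reject isolated spikes.
        self.count = self.count + 1 if abs(residual) > self.threshold else 0
        if self.count >= self.persistence:
            self.faulty = True
        return self.faulty


def simulate(steps=200, dt=0.01, fault_step=50, remaining_effectiveness=0.5):
    """Hypothetical plant x' = b * u with a loss of actuator effectiveness at fault_step."""
    b_nominal, kp, x_ref = 1.0, 5.0, 1.0
    x = 0.0
    b_hat = b_nominal  # effectiveness assumed by the controller and the predictor
    monitor = FaultMonitor(threshold=1e-3, persistence=3)
    detected_at = None
    for k in range(steps):
        # True actuator effectiveness drops after the injected fault.
        b_true = b_nominal if k < fault_step else b_nominal * remaining_effectiveness
        # Proportional law scaled by the assumed effectiveness (the part that is reconfigured).
        u = kp * (x_ref - x) / b_hat
        x_pred = x + dt * b_hat * u  # model-based prediction
        x_new = x + dt * b_true * u  # "measured" next state
        residual = x_new - x_pred
        if monitor.update(residual) and detected_at is None:
            detected_at = k
            if abs(u) > 1e-9:
                # Reconfigure: re-estimate effectiveness from the observed response.
                b_hat = (x_new - x) / (dt * u)
        x = x_new
    return detected_at, b_hat, x


detected_at, b_hat, x_final = simulate()
print(f"fault detected at step {detected_at}, "
      f"estimated effectiveness {b_hat:.3f}, final x {x_final:.4f}")
```

Requiring several consecutive violations and latching the fault flag is one common way to avoid reacting to a single bad sample; a real UAV would involve sensor noise, a richer vehicle model and a more careful estimator.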

Example research

Robust Control/Fault-Tolerant Control techniques for fixed-wing aircraft

This research was based on the Cessna Citation II aircraft owned by TU Delft and NLR. Modern control techniques were implemented on a model of this aircraft to evaluate their tracking and fault-tolerance performance.